Fixing the fundamental mistake in web form processing

Forms series—part 2

This article is part two of the forms series:

Intro and Part 1:

Part 2: Fixing the fundamental mistake in web form processing (this article)

There is a problem in the foundation of today’s most popular web frameworks. It’s been there all along, but maybe wasn’t apparent in the early days. It still doesn’t seem to be apparent to most developers. At least not directly, because what these frameworks’ maintainers have done is address the symptoms of this problem without ever acknowledging the cause.

In this article and the entire series, I’m referring to traditional, server-side web frameworks. I’ve known for a long time that they’re the simplest choice for building forms. Here’s how most of these frameworks route a form’s HTTP requests[1]:

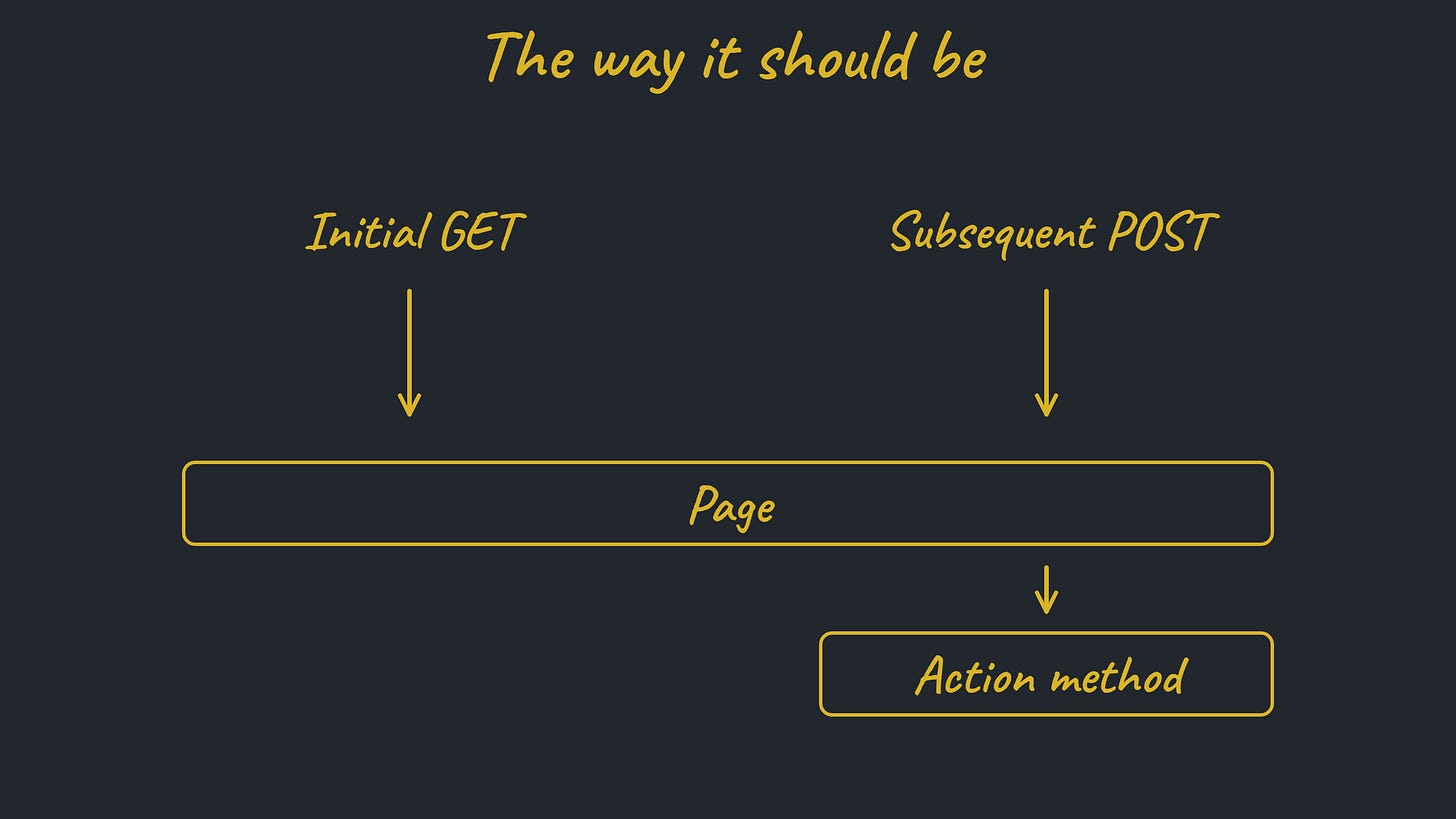

GET requests are routed to a view, which renders the form. POST requests are routed to an action method, which processes the submitted data.

The problem with routing form submissions directly to action methods is lack of context. The action method receives a raw list of form data in the request body and needs to make sense of it. And making sense of it requires duplicating knowledge that is already in the view, namely which data entities and fields exist on the form and what modifications are permitted. Frameworks have partially addressed this with features like structured naming of form controls, which enables automatic mapping to trees of model objects.

But there are essential problems with this architecture that cannot be overcome. Developers must restate the list of form fields in the action to defend against mass assignment attacks. For any drop-down widget, they must reestablish the list of options that was shown to the user, to ensure that a malicious, handcrafted POST request cannot succeed in selecting a choice meant to be unavailable. Data types and formats must match those used by the rendered form. The list goes on, and complexity multiplies when the form expands with new features. It’s all an unnecessary maintenance burden.
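To make the duplication concrete, here is a minimal sketch of what an action method in this style tends to look like. It is not taken from any particular framework: `MovieActions`, `PermittedFields`, `OfferedRatings`, and the field names are hypothetical, and the price range simply mirrors the movie example used later in this article. Every marked constant restates something the view already knows.

```csharp
#nullable enable
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical stand-in for a controller action in a conventional framework.
public static class MovieActions {
    // Duplicate #1: the fields this form may set (the mass assignment guard).
    static readonly HashSet<string> PermittedFields = new() { "price", "rating" };

    // Duplicate #2: the options the view offered in its rating drop-down.
    static readonly HashSet<string> OfferedRatings = new() { "G", "PG", "PG-13" };

    // Returns the validation errors for a raw set of posted name/value pairs.
    public static IReadOnlyList<string> Create( IReadOnlyDictionary<string, string> postData ) {
        var errors = new List<string>();

        foreach( var name in postData.Keys.Where( k => !PermittedFields.Contains( k ) ) )
            errors.Add( $"Unexpected field: {name}" );

        // Duplicate #3: the data type, format, and range the view used for the price input.
        if( !postData.TryGetValue( "price", out var priceText ) ||
            !decimal.TryParse( priceText, NumberStyles.Number, CultureInfo.InvariantCulture, out var price ) ||
            price < 0 || price > 100 )
            errors.Add( "Invalid price" );

        if( !postData.TryGetValue( "rating", out var rating ) || !OfferedRatings.Contains( rating ) )
            errors.Add( "Invalid rating" );

        return errors;
    }
}
```

If the view later gains a fourth rating or a wider price range, nothing forces these constants to change with it, which is exactly the maintenance burden described above.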

Why did we do this? Why did we throw Don’t Repeat Yourself under the bus? Because of the popular belief that spending the time to fully rebuild the view before even beginning to process the submission can’t possibly be a good idea. That would mean…building two views (or the same view twice) on a single request! How could such a flow ever perform well enough for production use?

Try to set this concern aside for a minute. Think about a humbler design. What if we simplify things, reduce our maintenance costs, and, yes, admit that we don’t need absolute maximum performance?

ASP.NET Web Forms: The Good Part

I began my career building apps with the ASP.NET Web Forms framework from Microsoft. The critics are right: it got a lot wrong. But one thing it got right was routing form POST requests back to the page that created the form, and enabling the page to “rebuild itself” before receiving the field values.

The Enterprise Web Framework (EWF) in EWL borrows this concept; you can see how it translates to code in the previous article. And back to performance: while it might seem expensive to rebuild the page on POST, in my experience it’s never been a problem. I’ve also learned that a framework can use deferred execution (as EWF does) to avoid fully rebuilding the page all the way through to a finished HTML document. It can merely create enough of a skeleton to know what and where the form fields are.
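The following is a rough sketch of the deferred-execution idea, not EWF’s actual API. `FormField`, `MoviePage`, `BuildForm`, and `RenderCount` are hypothetical names invented for illustration. The page is rebuilt on POST, but because the markup lives behind a delegate, only the skeleton (field names and validators) is actually constructed.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical field definition: the validator is cheap to create, and the markup
// is wrapped in a delegate so that it is only produced when someone asks for it.
public sealed record FormField( string Name, Func<string, string?> Validate, Func<string> RenderHtml );

public static class MoviePage {
    // Counts markup generation, only to make the deferral observable in this sketch.
    public static int RenderCount;

    // The single definition of the form, built on both GET and POST.
    public static IReadOnlyList<FormField> BuildForm() => new[] {
        new FormField(
            "price",
            text => decimal.TryParse( text, NumberStyles.Number, CultureInfo.InvariantCulture, out var price ) &&
                    price >= 0 && price <= 100
                ? null
                : "Price must be between 0 and 100.",
            () => {
                RenderCount++;
                return "<input type=\"number\" name=\"price\" min=\"0\" max=\"100\">";
            } )
    };

    // GET: build the form, then run the deferred rendering.
    public static string HandleGet() => string.Concat( BuildForm().Select( f => f.RenderHtml() ) );

    // POST: build the same form, but only walk the skeleton. RenderHtml is never invoked.
    public static IReadOnlyList<string> HandlePost( IReadOnlyDictionary<string, string> postData ) {
        var errors = new List<string>();
        foreach( var field in BuildForm() ) {
            // Only fields the page defines are read; anything else in postData is ignored.
            var text = postData.TryGetValue( field.Name, out var posted ) ? posted : "";
            var error = field.Validate( text );
            if( error is not null )
                errors.Add( error );
        }
        return errors;
    }
}
```

Calling `HandlePost` with an out-of-range price and an extra `is_admin` key yields a single price error, ignores the extra key, and leaves `RenderCount` untouched.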

Zooming in on fields

Take the expression for the movie price control from the previous article:

    movieInsert.GetPriceFormItem( minValue: 0, maxValue: 100 )

As I mentioned there, this is all you need for an HTML input type=number control that is bound to the movie price field. It handles the control, the label, the validation rules, the display of error messages. Mass assignment attacks are not possible, so there’s no other place where you need to whitelist the price field, or blacklist other movie fields. And again, as I stated in that article, there is no model class anywhere that supports this page. There is only the database, and automatically generated code.

The formatting of the decimal number as a string, for HTML, and the parsing of a submitted string back into a number, are both encapsulated within this expression. A formatting mismatch of any kind is not possible. If you needed to use a custom widget with its own formatting requirements, you would likewise have both translations (number → string and string → number) defined in the same place.
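As an illustration of keeping both translations in one place, here is a small sketch of a control that owns the number → string and string → number conversions together. `PriceControl` and its members are hypothetical and are not how `GetPriceFormItem` is implemented; the fixed two-decimal format and invariant culture are assumptions made for the example.

```csharp
#nullable enable
using System.Globalization;

// Hypothetical control that owns both directions of the number <-> string translation.
public sealed class PriceControl {
    // Assumed for this sketch: two decimal places, culture-independent.
    const string Format = "0.00";
    static readonly CultureInfo Culture = CultureInfo.InvariantCulture;

    readonly decimal min;
    readonly decimal max;

    public PriceControl( decimal min, decimal max ) {
        this.min = min;
        this.max = max;
    }

    // number -> string, used when rendering the input's value attribute.
    public string ToFieldValue( decimal price ) => price.ToString( Format, Culture );

    // string -> number, used when reading the submitted value. Same culture, same class.
    public bool TryFromFieldValue( string? text, out decimal price ) =>
        decimal.TryParse( text, NumberStyles.AllowDecimalPoint, Culture, out price ) &&
        price >= min && price <= max;
}
```

Because both methods share the same culture and live in the same class, changing the format means changing one file, and a value the control rendered is always one it can read back.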

And take the drop-down list with movie ratings:

    movieInsert.GetRatingDropDownFormItem(
        DropDownSetup.Create(
            getRatings().Select( r => SelectListItem.Create( r, r ) )
        )
    )

    IReadOnlyCollection<string> getRatings() => [ "G", "PG", "PG-13" ];

The same list of ratings is used for rendering and for validation. It’s impossible for a value that is not listed to make it into the database via this form, even if such a value were valid according to database integrity constraints. This is not just a maintenance time saver; it’s also the complete elimination of a security risk.

Imagine having a drop-down list for setting a user’s role, where the two choices are “editor” and “normal user.” What if there were a third role, “admin,” defined in the database but not accessible on this particular page? Do you see the value of knowing, for sure, that the “admin” value cannot be set maliciously through your page? The value of never having to open up Postman or another HTTP manipulation tool to test for such an attack vector?
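Here is a minimal sketch of that role scenario, assuming a hypothetical `DropDown` class (this is not EWL’s `DropDownSetup`). The point is only that the options handed to the control are the sole source of truth for both the rendered markup and the accepted values; the role values `editor` and `user` are invented for the example.

```csharp
#nullable enable
using System.Collections.Generic;
using System.Linq;
using System.Net;

// Hypothetical drop-down: the options passed in are the only values it will ever accept.
public sealed class DropDown {
    readonly string name;
    readonly IReadOnlyList<(string Value, string Label)> options;

    public DropDown( string name, IEnumerable<(string Value, string Label)> options ) {
        this.name = name;
        this.options = options.ToList();
    }

    // Rendering reads the option list...
    public string Render() =>
        $"<select name=\"{WebUtility.HtmlEncode( name )}\">" +
        string.Concat(
            options.Select(
                o => $"<option value=\"{WebUtility.HtmlEncode( o.Value )}\">{WebUtility.HtmlEncode( o.Label )}</option>" ) ) +
        "</select>";

    // ...and validation reads the very same list, so the two cannot drift apart.
    public bool Accepts( string? postedValue ) => options.Any( o => o.Value == postedValue );
}
```

A page that constructs this control with only the “editor” and “normal user” options will reject a handcrafted POST carrying `admin`, without any separate allow-list to maintain.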

Do you see the power, and superiority, of integrating validation/POST logic into the form-rendering code?

[1] In MVC frameworks like Rails, all requests are routed to controller actions, but GETs are typically forwarded immediately to a view, where an HTML-based template will render the form. POSTs are routed to a different action within the same controller that persists the submitted data.

In a component-based framework such as Blazor (in static server-side rendering mode), requests are routed to pages. GET causes the Razor/HTML portion (i.e. the view) of the page to render, and POST executes a C# method in the page’s code block. But the method has no awareness of or dependency on the view.